The world’s best AI researchers have been paid millions of dollars for their expertise. How much do their research breakthroughs contribute to AI progress compared with building bigger, more powerful datacenters? Our new paper, *On the Origins of Algorithmic Progress in AI*, suggests that the literature overestimates the role of algorithmic breakthroughs and underestimates the role of increased computing resources.

The sources of progress in AI capabilities are typically broken down into three distinct components: compute scale, hardware efficiency, and algorithmic efficiency.

Each of these components is usually treated as orthogonal to the others, so improvements from one source can be evaluated independently of the rest. For example, hardware efficiency (in FLOPs per dollar) improved 45x between 2013 and 2024. Because compute scale and hardware efficiency are considered independent, you can expect roughly that same 45x hardware efficiency gain regardless of whether you have 10 GPUs or 10,000.[1]

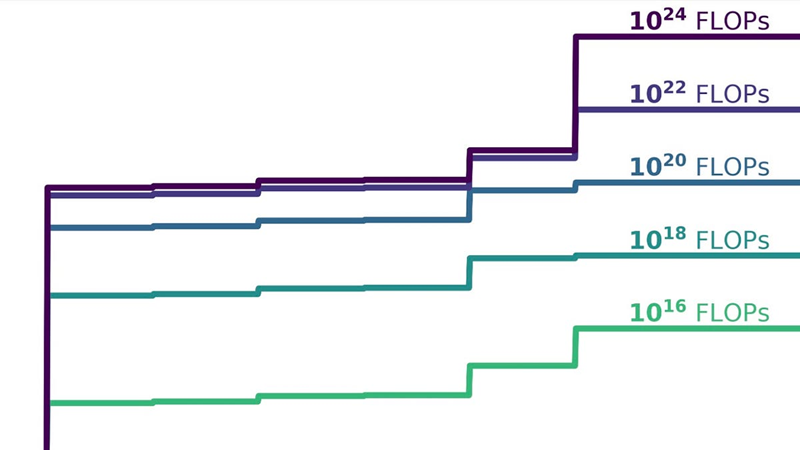

Traditionally, algorithmic efficiency is also thought to be independent of compute scale. Whether you have 10¹⁵ FLOPs or 10²⁵ FLOPs, the literature assumes that when researchers discover better training techniques, applying these algorithmic improvements should always yield the same multiplicative efficiency improvements – regardless of compute scale (e.g. 10x less compute at 10¹⁵ FLOPs and 10²⁵ FLOPs alike). But is this a safe assumption?
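To make the assumption concrete, here is a minimal Python sketch of what scale-independent algorithmic efficiency would imply. The function name `compute_needed` and the constant `GAIN` are hypothetical, chosen for illustration; the 10x figure is simply the example from the text, not a result from the paper.

```python
def compute_needed(baseline_flops: float, efficiency_gain: float) -> float:
    """FLOPs needed to match baseline performance after an algorithmic
    improvement, ASSUMING the gain is a constant multiplier that does
    not depend on compute scale (the traditional assumption)."""
    return baseline_flops / efficiency_gain


GAIN = 10.0  # illustrative 10x efficiency improvement (assumption)

# Under the scale-independence assumption, the same multiplier applies
# at a small training budget and at a frontier-scale budget.
for baseline in (1e15, 1e25):
    reduced = compute_needed(baseline, GAIN)
    ratio = baseline / reduced
    print(f"{baseline:.0e} FLOPs -> {reduced:.0e} FLOPs ({ratio:.0f}x less compute)")
```

Under this model the savings ratio is identical at both budgets by construction. That built-in property is exactly what the question above puts in doubt.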